The night sky, a canvas of distant suns and enigmatic nebulae, has captivated human curiosity for millennia. Early astronomers, armed with little more than their eyes, keen observational skills, and rudimentary tools, painstakingly charted the heavens. Imagine Johannes Kepler, dedicating years to deciphering Tycho Brahe’s meticulous planetary observations, performing endless calculations by hand to formulate his laws of planetary motion. Or picture the “human computers” of the 19th and early 20th centuries, teams of people, often women, who carried out arithmetic by hand to calculate ephemerides or reduce astronomical data. This was an era where a single complex calculation could consume months, even years, and the risk of error was a constant companion. The sheer computational burden limited the scope and complexity of the questions astronomers could realistically tackle.

The Dawn of Electronic Brains

The arrival of the first electronic computers in the mid-20th century marked a profound turning point. Machines like ENIAC and EDSAC, initially behemoths filling entire rooms, offered a glimpse of a future where calculations could be performed at speeds previously unimaginable. Early astronomical applications naturally gravitated towards problems bottlenecked by arithmetic: orbital mechanics was a prime candidate. Calculating the precise trajectories of planets, moons, comets, and, later, artificial satellites, became dramatically faster and more accurate. What once took weeks of manual effort could be accomplished in hours, then minutes. This wasn’t just a quantitative leap; it began to change the qualitative nature of astronomical research. The reduced risk of simple arithmetic errors in long calculations also meant greater reliability in the results, allowing scientists to trust their foundations more firmly as they built more elaborate theories.
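To give a flavor of the arithmetic involved, here is a minimal Python sketch of one classic orbital-mechanics task: solving Kepler's equation, M = E - e sin E, for the eccentric anomaly E, then converting it to a position in the orbital plane. The function names, tolerance, and starting guess are illustrative assumptions, not a reconstruction of any historical program.

```python
import math

def solve_kepler(mean_anomaly, eccentricity, tol=1e-12, max_iter=50):
    """Solve M = E - e*sin(E) for E (radians) with Newton's method.

    Valid for elliptical orbits only (0 <= e < 1).
    """
    M = mean_anomaly % (2.0 * math.pi)      # reduce to [0, 2*pi)
    # Common starting guess: M for modest eccentricity, pi for very eccentric orbits.
    E = M if eccentricity < 0.8 else math.pi
    for _ in range(max_iter):
        residual = E - eccentricity * math.sin(E) - M
        slope = 1.0 - eccentricity * math.cos(E)
        step = residual / slope
        E -= step
        if abs(step) < tol:
            break
    return E

def orbital_position(semi_major_axis, eccentricity, mean_anomaly):
    """Position (x, y) in the orbital plane, with the focus at the origin
    and periapsis on the +x axis."""
    E = solve_kepler(mean_anomaly, eccentricity)
    x = semi_major_axis * (math.cos(E) - eccentricity)
    y = semi_major_axis * math.sqrt(1.0 - eccentricity ** 2) * math.sin(E)
    return x, y

# Example: a quarter of the way (in time) around an orbit with a = 1, e = 0.2.
print(orbital_position(1.0, 0.2, math.pi / 2))
```

Each call converges in a handful of iterations, which is exactly the kind of repetitive work that once had to be done by hand for every date in an ephemeris.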

Unlocking Complexity: The Power of Simulation

Beyond merely accelerating existing types of calculations, computers opened the door to an entirely new methodology: numerical simulation. Astronomers could now create virtual laboratories to test theories and explore scenarios far beyond the reach of direct observation or analytical solutions. This ability to model dynamic, evolving systems has revolutionized nearly every subfield of astrophysics.

Peering into Stellar Nurseries and Graves

The life cycle of a star, from its birth in a collapsing gas cloud to its eventual demise as a white dwarf, neutron star, or black hole, is a complex interplay of gravity, nuclear physics, and fluid dynamics. Computers allow astrophysicists to build intricate stellar evolution models that track these processes over millions or billions of years. These models can simulate the changing temperature, pressure, luminosity, and chemical composition within a star, predicting how stars of different masses will evolve and what elements they will synthesize and eventually disperse into the cosmos. Without computational models, our understanding of phenomena like supernovae or the formation of heavy elements would be vastly more speculative. We can now simulate the internal convection currents, magnetic field generation, and mass loss events that characterize a star’s life, comparing these simulated outputs to observed stellar populations.

Sculpting Galaxies

Galaxies, vast collections of stars, gas, dust, and the enigmatic dark matter, are not static entities. They grow, merge, and change shape over cosmic eons. Understanding their formation and evolution requires grappling with the gravitational interactions of billions of objects. This is the realm of N-body simulations. Early simulations might have tracked a few hundred particles; today, supercomputers can handle billions, representing stars, gas clouds, and dark matter halos. These simulations have been crucial in demonstrating the pivotal role of dark matter in providing the gravitational scaffolding for galaxy formation. They allow us to witness virtual galaxy mergers, observing how tidal forces strip stars and gas into dramatic streams, and how such events can trigger bursts of star formation or fuel supermassive black holes at galactic centers. The stunning spiral arms, elliptical bulges, and irregular forms we observe in the universe can be reproduced and understood through these computational experiments.
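The core idea of an N-body simulation fits in a few dozen lines. The sketch below, in Python, sums the gravitational pull between every pair of particles and advances them with a leapfrog (kick-drift-kick) integrator. The unit system (G = 1), the softening length, and the two-body test setup are assumptions chosen for illustration; production codes replace the direct pairwise sum with tree or mesh methods to reach billions of particles.

```python
import math

G = 1.0  # gravitational constant in simulation units (an assumption of this sketch)

def accelerations(positions, masses, softening):
    """Direct O(N^2) sum of softened pairwise gravitational accelerations."""
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            inv_r3 = (d[0] ** 2 + d[1] ** 2 + d[2] ** 2 + softening ** 2) ** -1.5
            for k in range(3):
                acc[i][k] += G * masses[j] * d[k] * inv_r3
                acc[j][k] -= G * masses[i] * d[k] * inv_r3
    return acc

def leapfrog_step(positions, velocities, masses, dt, softening=1e-3):
    """Advance the system in place by one kick-drift-kick step."""
    acc = accelerations(positions, masses, softening)
    for i in range(len(positions)):
        for k in range(3):
            velocities[i][k] += 0.5 * dt * acc[i][k]   # half kick
            positions[i][k] += dt * velocities[i][k]   # full drift
    acc = accelerations(positions, masses, softening)
    for i in range(len(positions)):
        for k in range(3):
            velocities[i][k] += 0.5 * dt * acc[i][k]   # second half kick

def total_energy(positions, velocities, masses, softening=1e-3):
    """Kinetic plus softened potential energy, used here as a sanity check."""
    energy = sum(0.5 * m * sum(c * c for c in v) for m, v in zip(masses, velocities))
    n = len(positions)
    for i in range(n):
        for j in range(i + 1, n):
            r2 = sum((positions[j][k] - positions[i][k]) ** 2 for k in range(3))
            energy -= G * masses[i] * masses[j] / math.sqrt(r2 + softening ** 2)
    return energy

# Two equal masses on a roughly circular mutual orbit.
v = math.sqrt(0.5)
positions = [[-0.5, 0.0, 0.0], [0.5, 0.0, 0.0]]
velocities = [[0.0, -v, 0.0], [0.0, v, 0.0]]
masses = [1.0, 1.0]
e_start = total_energy(positions, velocities, masses)
for _ in range(1000):
    leapfrog_step(positions, velocities, masses, dt=0.01)
e_end = total_energy(positions, velocities, masses)
print("relative energy drift:", abs((e_end - e_start) / e_start))
```

The direct sum costs work proportional to the square of the particle count, which is why the jump from hundreds of particles to billions depended on smarter algorithms as much as on faster hardware.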

The Cosmic Web Unveiled

On the grandest scales, galaxies are not distributed randomly but are arranged in a vast, interconnected structure known as the cosmic web, comprising clusters, filaments, and vast empty voids. Cosmological simulations, often starting from initial conditions derived from observations of the cosmic microwave background radiation, model the evolution of matter under gravity over billions of years. These simulations, like the famous Millennium Simulation or the IllustrisTNG project, have been instrumental in showing how tiny density fluctuations in the early universe could grow into the complex structures we see today. Gravity-only runs such as the Millennium Simulation follow the dark matter alone, while hydrodynamical projects such as IllustrisTNG also incorporate gas dynamics, star formation, and feedback processes from supernovae and active galactic nuclei, providing a more complete picture of cosmic structure formation. The statistical properties of these simulated universes can then be directly compared with large-scale galaxy surveys, testing our cosmological models with increasing precision.

Computational astrophysics is now an indispensable pillar of modern astronomy, standing alongside observational and theoretical astronomy. The synergy between these branches allows for unprecedented insights into the universe. Without advanced computing, our understanding of cosmic evolution, from planet formation to the large-scale structure of the cosmos, would be drastically limited and far more speculative.

The Data Deluge and Computational Lifelines

Parallel to the rise of computational modeling, astronomical instrumentation has also undergone a revolution, much of it computer-driven. Modern telescopes, whether ground-based radio arrays like the Atacama Large Millimeter/submillimeter Array (ALMA) or spaceborne observatories like the Hubble Space Telescope and the James Webb Space Telescope (JWST), generate staggering volumes of data. We’re talking terabytes, even petabytes, from a single observing run or survey. Handling this data deluge would be impossible without sophisticated computational infrastructure. Automated pipelines process raw data, performing calibration, removing instrumental signatures, and identifying sources. Complex algorithms are used for image reconstruction, especially in interferometry where data from multiple antennas must be combined to create a single high-resolution image. Moreover, vast digital archives, accessible globally, store this precious data, allowing astronomers worldwide to conduct research long after the initial observations are made. The very ability to conduct large sky surveys, cataloging millions or billions of objects, is predicated on advanced data processing and management capabilities.
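As a rough illustration of what one stage of such a pipeline does, the Python sketch below calibrates a small image (subtracting a dark frame and dividing by a normalized flat field) and then flags pixels that stand well above the background. The function names, the 5-sigma threshold, and the use of the median absolute deviation as a noise estimate are assumptions for this example; real observatory pipelines are far more elaborate and instrument-specific.

```python
from statistics import median

def calibrate(raw, dark, flat):
    """Return (raw - dark) / normalized_flat, pixel by pixel.

    All three inputs are 2D lists of the same shape.
    """
    flat_values = [v for row in flat for v in row]
    flat_mean = sum(flat_values) / len(flat_values)
    return [
        [(r - d) / (f / flat_mean) for r, d, f in zip(raw_row, dark_row, flat_row)]
        for raw_row, dark_row, flat_row in zip(raw, dark, flat)
    ]

def find_sources(image, n_sigma=5.0):
    """Return (row, col) of pixels more than n_sigma above the background.

    Background and noise are estimated robustly from the median and the
    median absolute deviation (MAD), so a few bright sources do not bias them.
    """
    pixels = [v for row in image for v in row]
    background = median(pixels)
    mad = median([abs(v - background) for v in pixels])
    sigma = max(1.4826 * mad, 1e-12)   # MAD scaled to a Gaussian standard deviation
    threshold = background + n_sigma * sigma
    return [
        (i, j)
        for i, row in enumerate(image)
        for j, value in enumerate(row)
        if value > threshold
    ]
```

Calling `find_sources(calibrate(raw, dark, flat))` on a frame returns the pixel coordinates of candidate detections, which later stages would group into sources and measure.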

Computational Discovery and Verification

Computers are not just for crunching numbers or managing data; they are tools for discovery. Simulations can predict phenomena before they are observed, guiding observational campaigns. For example, models of accretion disks around black holes predicted specific observational signatures that astronomers then sought. The detection of gravitational waves by LIGO and Virgo was a triumph not just of experimental physics but also of computational astrophysics. Identifying the faint chirps of merging black holes or neutron stars from noisy detector data required matching the signals against a vast library of pre-computed waveform templates, themselves built from waveform models calibrated against complex numerical relativity simulations. Furthermore, computation is vital for theory verification. If a new theory of, say, dark energy is proposed, it can be implemented in cosmological simulations. If the simulated universe evolves in a way that matches observations, it lends credence to the theory; if not, the theory may need revision or abandonment.
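The template-matching idea can be sketched in Python with a toy example. The code below slides a known waveform along a noisy data stream and computes, at each offset, how strongly the data resemble the template. The "chirp" here is an invented rising-frequency sinusoid, not a physical waveform, and the noise is assumed to be white with unit variance; real searches work in the frequency domain and weight the data by the detector's measured noise spectrum.

```python
import math
import random

def chirp_template(n_samples, f_start=0.02, f_end=0.2):
    """Toy chirp: a sinusoid whose frequency (cycles per sample) and amplitude
    both rise linearly. Purely illustrative, not a physical waveform."""
    template = []
    phase = 0.0
    for i in range(n_samples):
        frac = i / n_samples
        phase += 2.0 * math.pi * (f_start + (f_end - f_start) * frac)
        template.append((0.3 + 0.7 * frac) * math.sin(phase))
    return template

def matched_filter(data, template):
    """Correlate the template with the data at every offset.

    Dividing by the template norm gives a signal-to-noise-like statistic,
    under the assumption of white, unit-variance noise.
    """
    n = len(template)
    norm = math.sqrt(sum(t * t for t in template))
    return [
        sum(data[offset + i] * template[i] for i in range(n)) / norm
        for offset in range(len(data) - n + 1)
    ]

# Hide a chirp in noise and try to recover where it starts.
rng = random.Random(0)
template = chirp_template(200)
data = [rng.gauss(0.0, 1.0) for _ in range(2000)]
true_offset = 700
for i, t in enumerate(template):
    data[true_offset + i] += 2.0 * t

snr = matched_filter(data, template)
best = max(range(len(snr)), key=snr.__getitem__)
print("injected at", true_offset, "recovered near", best, "with statistic", round(snr[best], 1))
```

A real search repeats this comparison for every template in the library, which is why the computational cost of gravitational-wave detection is dominated by the size of the template bank.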

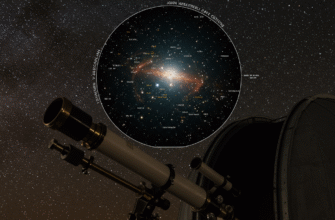

Visualization: Seeing the Unseeable

The outputs of complex simulations or large datasets are often just massive arrays of numbers, inherently difficult for the human mind to grasp. Computational visualization techniques transform this raw information into intuitive, often breathtaking, images and animations. We can now fly through simulated universes, watch galaxies collide in three dimensions, or visualize the intricate dance of gas around a forming star. These visualizations are not just pretty pictures; they are crucial tools for understanding complex physical processes, identifying unexpected patterns, and communicating scientific results to both peers and the public. They allow us to “see” phenomena that are too vast, too slow, too fast, or too distant to perceive directly.

The Modern Computational Frontier

The evolution of computing in astronomy continues unabated. Today’s research often relies on high-performance supercomputers, capable of petaflops (quadrillions of floating-point operations per second), with the largest systems now reaching exaflops. These machines enable simulations of unprecedented scale and fidelity. Distributed computing projects, like Einstein@Home, harness the idle processing power of countless personal computers around the world to search for pulsars or gravitational waves. And increasingly, machine learning and artificial intelligence (AI) are making their mark. AI algorithms are being trained to classify galaxies, identify transient events in real-time data streams, sift through petabytes of data for rare objects, and even optimize observing schedules for telescopes. There’s also exciting research into using AI to accelerate simulations themselves, creating “surrogate models” that can approximate the results of complex calculations much faster. The journey from manual sums to AI-driven discovery has been remarkable, and as computational power continues to grow, astronomers will undoubtedly peer even deeper into the cosmos, asking, and perhaps answering, questions we can currently only dream of.
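The surrogate-model idea can be shown in miniature. In the Python sketch below, a stand-in "expensive" function is evaluated at a modest number of training points, and a cheap approximation then answers every later query. Piecewise-linear interpolation is used here in place of a trained neural network purely to keep the example short; the function names and the toy function itself are hypothetical.

```python
import bisect
import math

def expensive_simulation(x):
    """Stand-in for a costly calculation (hypothetical); any smooth function would do."""
    return math.exp(-x) * math.sin(3.0 * x)

def build_surrogate(func, lo, hi, n_train):
    """Evaluate func at n_train evenly spaced points on [lo, hi] and return
    a fast piecewise-linear approximation of it."""
    xs = [lo + (hi - lo) * i / (n_train - 1) for i in range(n_train)]
    ys = [func(x) for x in xs]          # the only calls to the expensive function

    def surrogate(x):
        if not lo <= x <= hi:
            raise ValueError("surrogate is only valid inside its training range")
        # Index of the grid interval [xs[j-1], xs[j]] that contains x.
        j = min(max(bisect.bisect_right(xs, x), 1), n_train - 1)
        weight = (x - xs[j - 1]) / (xs[j] - xs[j - 1])
        return (1.0 - weight) * ys[j - 1] + weight * ys[j]

    return surrogate

surrogate = build_surrogate(expensive_simulation, 0.0, 2.0, n_train=201)
worst = max(
    abs(surrogate(2.0 * i / 999) - expensive_simulation(2.0 * i / 999))
    for i in range(1000)
)
print("largest surrogate error on the test grid:", worst)
```

The refusal to answer outside the training range reflects a real limitation of the approach: a surrogate is only as trustworthy as the simulations it was built from.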